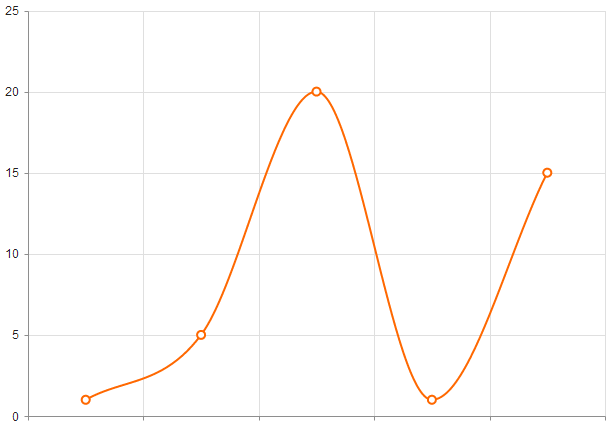

This question is already thoroughly answered, so I think a runtime analysis of the proposed methods would be of interest (it was for me, anyway). I will also look at the behavior of the methods at the center and at the edges of the noisy dataset. The comparison includes savgol with different fit functions and some numpy methods.

Kernel regression scales badly and Lowess is a bit faster, but both produce smooth curves. Savgol is a middle ground on speed and can produce both jumpy and smooth outputs, depending on the degree of the polynomial, for example `yhat = savgol_filter(y, 51, 3)` (window size 51, polynomial order 3). FFT is extremely fast, but only works on periodic data. Moving average methods with numpy are faster but obviously produce a graph with steps in it.

I generated 1000 data points in the shape of a sine curve, with `size = 1000` and `y = np.sin(x) + np.random.random(size) * 0.2`. I pass these into a function that measures the runtime and plots the resulting fit, `test_func(f, label)`, where `f` is a function handle to one of the smoothing methods.

I tested many different smoothing functions. `arr` is the array of y values to be smoothed and `span` is the smoothing parameter: the lower it is, the more closely the fit approaches the original data; the higher it is, the smoother the resulting curve.

- `smooth_data_convolve_my_average(arr, span)` starts from `re = np.convolve(arr, np.ones(span * 2 + 1) / (span * 2 + 1), mode="same")`. The "my_average" part shrinks the averaging window on the side that reaches beyond the data and keeps the other side the same size as given by `span`.
- `smooth_data_np_average(arr, span)` is my original, naive approach.
- The plain convolution variant simply returns `np.convolve(arr, np.ones(span * 2 + 1) / (span * 2 + 1), mode="same")`.
- `smooth_data_np_cumsum_my_average(arr, span)` computes `moving_average = (cumsum_vec[2 * span:] - cumsum_vec[:-2 * span]) / (2 * span)`; its edge handling is slightly different to before.
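To make the moving-average comparison concrete, here is a minimal sketch of two of the numpy approaches mentioned above, plus a small timing helper. It is not the original benchmark code: the x range, the random seed, and the helper names (`make_noisy_sine`, `moving_average_convolve`, `moving_average_cumsum`, `time_it`) are assumptions made for illustration, and the cumsum version here averages over `2 * span + 1` points so that it matches the convolution exactly.

```python
import time

import numpy as np


def make_noisy_sine(size=1000, seed=0):
    """Noisy sine curve; the x range (0 to 4*pi) and the seed are assumptions."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0, 4 * np.pi, size)
    y = np.sin(x) + rng.random(size) * 0.2
    return x, y


def moving_average_convolve(arr, span):
    """Centered moving average over 2*span + 1 points (zero-padded at the edges)."""
    window = 2 * span + 1
    return np.convolve(arr, np.ones(window) / window, mode="same")


def moving_average_cumsum(arr, span):
    """Same centered average via cumulative sums; returns interior points only."""
    window = 2 * span + 1
    csum = np.cumsum(np.insert(np.asarray(arr, dtype=float), 0, 0.0))
    return (csum[window:] - csum[:-window]) / window


def time_it(func, arr, span, repeats=5):
    """Average wall-clock seconds per call over a few repeats."""
    start = time.perf_counter()
    for _ in range(repeats):
        func(arr, span)
    return (time.perf_counter() - start) / repeats


x, y = make_noisy_sine()
span = 20
smooth_conv = moving_average_convolve(y, span)
smooth_csum = moving_average_cumsum(y, span)

for name, func in [("convolve", moving_average_convolve),
                   ("cumsum", moving_average_cumsum)]:
    print(f"{name:10s} {time_it(func, y, span):.6f} s per call")
```

The two results agree on the interior of the array (`smooth_conv[span:-span]` versus `smooth_csum`); they differ only in how the edges are treated, which is exactly where the "my_average" variants do extra work.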

#FITYK GETTING SMOOTH LINE INSTEAD OF ZIGZAG LINE CODE#

The Savitzky-Golay filter uses least squares to regress a small window of your data onto a polynomial, then uses the polynomial to estimate the point in the center of the window. Finally the window is shifted forward by one data point and the process repeats. This continues until every point has been optimally adjusted relative to its neighbors. It works great even with noisy samples from non-periodic and non-linear sources.

See my code below to get an idea of how easy it is to use. Note: I left out the code for defining the `savitzky_golay()` function because you can literally copy/paste it from the cookbook example I linked above.

`yhat = savitzky_golay(y, 51, 3)  # window size 51, polynomial order 3`

UPDATE: It has come to my attention that the cookbook example I linked to has been taken down. Fortunately, the Savitzky-Golay filter has been incorporated into the SciPy library (thanks for the updated link). To adapt the above code to use SciPy, type: `from scipy.signal import savgol_filter`
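The windowed least-squares idea can be checked directly against SciPy. The following is a minimal sketch, assuming synthetic noisy sine data and a hypothetical helper `local_poly_estimate` that is not part of SciPy or of the original answer; it fits a polynomial to one window with `np.polyfit` and compares the value at the window center with the output of `scipy.signal.savgol_filter`.

```python
import numpy as np
from scipy.signal import savgol_filter

# Synthetic test data (an assumption, chosen only for illustration).
rng = np.random.default_rng(0)
x = np.linspace(0, 2 * np.pi, 200)
y = np.sin(x) + rng.normal(scale=0.1, size=x.size)

window, order = 51, 3
yhat = savgol_filter(y, window, order)  # window size 51, polynomial order 3


def local_poly_estimate(values, center, window, order):
    """Least-squares polynomial fit on one window, evaluated at its center."""
    half = window // 2
    idx = np.arange(center - half, center + half + 1)
    # Fit against offsets from the center so the estimate is the value at 0.
    coeffs = np.polyfit(idx - center, values[idx], order)
    return np.polyval(coeffs, 0.0)


center = 100  # an interior point, so the full window fits inside the data
manual = local_poly_estimate(y, center, window, order)
print(abs(manual - yhat[center]))  # difference should be at rounding-error level
```

For interior points the manual fit and `savgol_filter` agree up to floating-point error. Near the edges `savgol_filter` uses its `mode` argument (default `"interp"`) to decide how to handle windows that would extend past the data.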
